- Ian's Newsletter

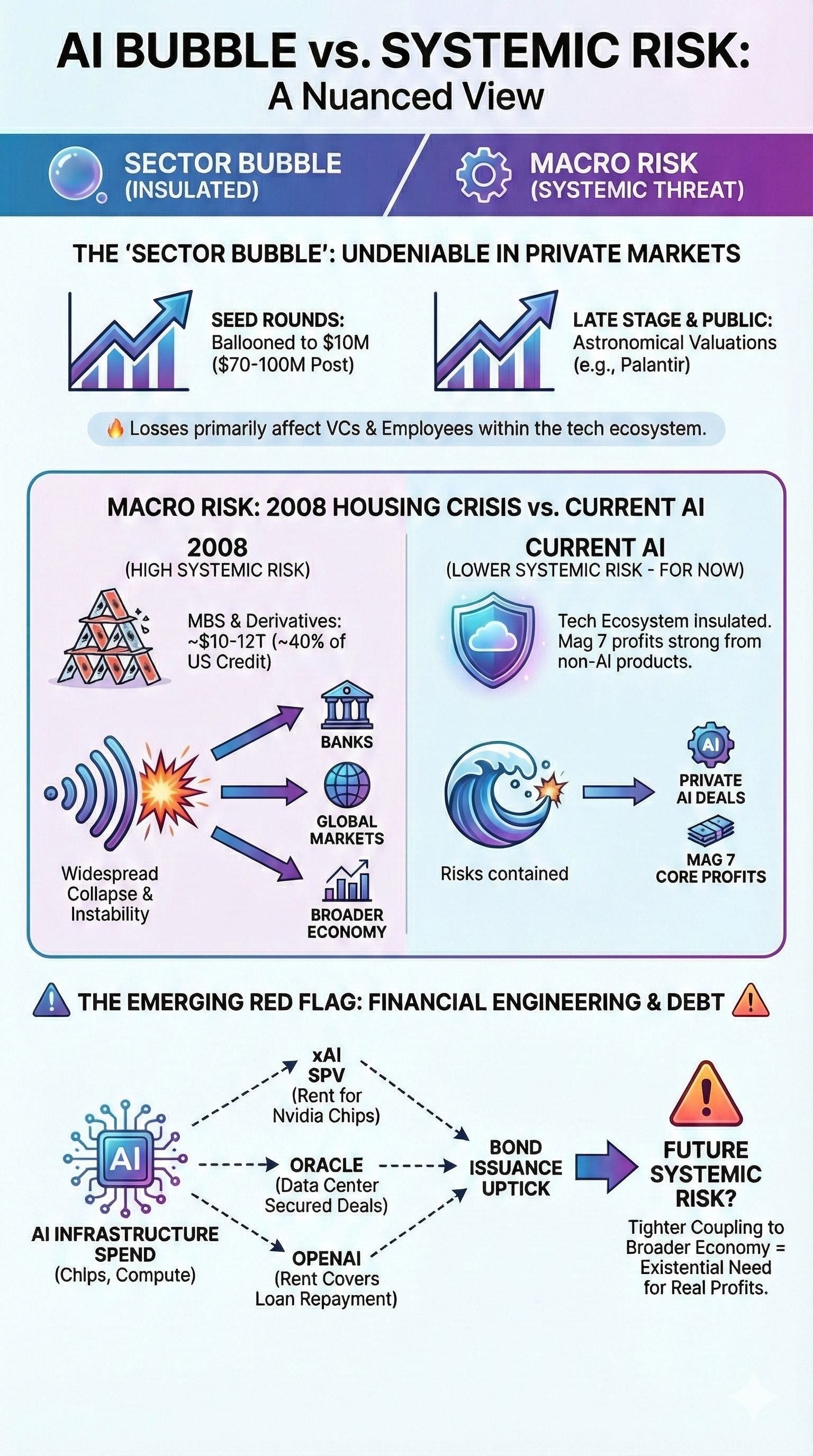

Thinking About Macro vs. Sector Bubbles & Systemic Risk

This was practically impossible to make with AI 6 months ago. (Made with Google’s Nano Banana 3 Pro.)

For my more public-market-inclined friends, the talk of the fall hasn't been the new AI models from Google, OpenAI, and Anthropic—or anything on the product side, really. It's about whether we're in a new bubble, driven by astronomical AI spend on infrastructure, compute, and talent, while profitability remains an open question.

Remember: there's a lot of money and careers riding on either outcome. I tend toward optimism on bubbles now—not because I think valuations are rational, but because I've come to focus on systemic risk and whether bubbles ripple out into other sectors or stages.

You'd be insane to think we're not in a private-market AI bubble, the most specific, narrow definition of a bubble we can confidently draw boundaries around. It's happening in early stage (seed rounds have ballooned to $10M on $70–100M post-money) and in late stage, and even in public markets if you count Palantir's valuation.
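To put the seed-round figures in concrete terms, here is a minimal sketch of what a $10M round at a $70–100M post-money valuation implies for investor ownership and pre-money valuation. The function names are hypothetical helpers for illustration, and the math ignores option pools, SAFEs, and other real-world terms.

```python
# Back-of-the-envelope sketch using the seed-round figures quoted above.
# All amounts are in millions of US dollars. Function names are illustrative.

def implied_ownership(round_size_m: float, post_money_m: float) -> float:
    """Fraction of the company sold in the round (simple priced-round math)."""
    if post_money_m <= 0:
        raise ValueError("post-money valuation must be positive")
    return round_size_m / post_money_m


def pre_money(round_size_m: float, post_money_m: float) -> float:
    """Pre-money valuation is post-money minus the new cash invested."""
    return post_money_m - round_size_m


ROUND_SIZE_M = 10.0
for post_money_m in (70.0, 100.0):
    stake = implied_ownership(ROUND_SIZE_M, post_money_m)
    pre = pre_money(ROUND_SIZE_M, post_money_m)
    print(f"${ROUND_SIZE_M:.0f}M on ${post_money_m:.0f}M post: "
          f"{stake:.1%} sold, ${pre:.0f}M pre-money")
```

In other words, founders in these rounds are selling roughly 10 to 14% of the company at a $60–90M pre-money valuation.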

The thing is, bubbles happen all the time now. The internet and social media have democratized information-sharing, and it's easier than ever to rally a group behind a specific thesis. That's why it feels like bubbles are being created, popping, and getting created again almost instantaneously.

Every technology has an R&D phase and an "applications" phase. I don't mean apps on your phone—I mean the application of the technology to real problems. And yes, we're lagging there, for a variety of non-technology reasons: internal politics, regulation, plain fear of new tools. But I think it's disingenuous to frame this as "AI doesn't work, full stop." It's closer to "AI isn't working for X, Y, and Z—yet."

The real work is taking this tech and figuring out how it applies to different problems and roles. That's where the money will be made; that's where most people's energy should go. Of course it hasn't happened yet. The future is still being built.

If you base life or company decisions on the AI-bubble narrative, you'll end up more confused than when you started. The fact is: AI product development is happening faster than ever; applied AI is still lagging on use cases and ROI; but from a technology standpoint, this is the worst the tech will ever be—and it's improving faster than anyone anticipated.

A useful frame for the gap between technology progress and capital-markets progress: Sequoia's June 2024 blog post, "AI's $600B Question." Has anything really changed on the capital side since then? Not much, just more debt facilities (which we'll get into below). It's more of the same. From a product perspective, though, 18 months ago feels like five years. The same month as that blog post, Google launched Gemini 1.5 Pro and Gemini 1.5 Flash; Anthropic released Claude 3.5 Sonnet. The month after, OpenAI pushed out GPT-4o Mini. Those are now several major releases behind. (If you don't believe me, here's an infographic I made using Google’s new Nano Banana 3 Pro model, which came out this week.)

If a tree falls in the woods, does it make a sound? In the same vein: if a bubble pops in a small ecosystem, does it really matter?

Systemic risk is a concept in finance—the quantifiable probability that an event can trigger widespread collapse or instability across a company, industry, or economy.

It's an integral part of thinking about bubbles, especially whether they matter to the broader economy.

I first came across the term in Econ 101, right before I started my first hedge fund job. (I was telling this story recently and at this point, a friend interjected to call me "unc," which I didn't appreciate but is nonetheless accurate.) The question was whether MBS exposure was creating systemic risk to the U.S. economy. On the surface, it seemed like a real debate. But when you accounted for all the derivative and synthetic-derivative products built on top of the mortgage industry, the number ballooned to roughly $10–12 trillion by 2008, or about 40 to 48% of the $25 trillion U.S. credit system. (More data from my Perplexity research here.)
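As a quick sanity check on that share, here is a small sketch that divides the exposure range by the size of the credit system. The dollar figures come straight from the paragraph above; the helper name is just illustrative.

```python
# Figures quoted in the text, in trillions of US dollars.
MBS_LINKED_LOW_T = 10.0
MBS_LINKED_HIGH_T = 12.0
US_CREDIT_SYSTEM_T = 25.0


def share_of_total(part: float, total: float) -> float:
    """Return `part` as a fraction of `total`."""
    if total <= 0:
        raise ValueError("total must be positive")
    return part / total


low_share = share_of_total(MBS_LINKED_LOW_T, US_CREDIT_SYSTEM_T)
high_share = share_of_total(MBS_LINKED_HIGH_T, US_CREDIT_SYSTEM_T)
print(f"Mortgage-linked exposure: {low_share:.0%} to {high_share:.0%} of the credit system")
```

The low end of the range works out to 40% and the high end to 48%, which is the scale of coupling that made the mortgage market a systemic problem.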

As big as AI is, we're nowhere near that level of systemic risk—across private markets or tech overall. Most deals have stayed insulated inside the tech ecosystem, and the Mag 7 are still driving huge profits from their core non-AI products. So is this really that different from normal R&D spend?

Yes and no. It's pretty different from normal R&D if you're handing billion-dollar packages to individual engineers. But vendor financing, debt financing, and other creative structures? Not a great sign—but not a disaster, either.

The most significant financial concern is now around debt facilities and obligations. The WSJ has been tracking this most closely, and the numbers are getting larger and the deals more intricate, which usually isn't a good sign. Meta is doing deals where it can exit the position every four years while still guaranteeing that investors get paid in full. xAI's investors Valor and Apollo are funding the purchase of 300,000 Nvidia chips through an SPV that xAI pays rent into. Oracle's deals are secured by data centers, and OpenAI's rent payments cover the loan repayments to the bank.

These are just a sampling. We're also seeing an uptick in bond issuance—another signal worth watching.

The higher the systemic risk, the worse an AI bubble pop would be for the U.S. economy. You almost want the bubble to pop while it's still insulated in cushy private markets, where losses are constrained to equity holders. (Sucks for VCs; really sucks for employees.) If the AI bubble and the broader economy become more tightly coupled—through these financing structures or other mechanisms—the need for real profits becomes existential.